Hands-On With “AI Art”

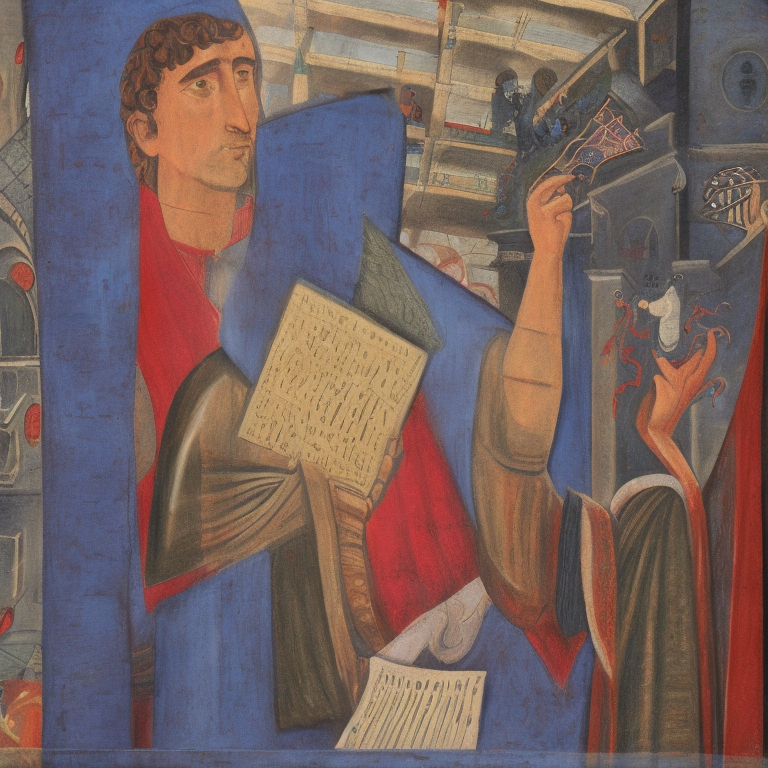

A brief catalog of frustrations

This article originally appeared as a forum post on Agora Road. All the illustrations were generated by Stable Diffusion.

I think the thing to keep in mind is that almost everyone who is talking about AI (or any technology topic) is a total know-nothing. When approaching these topics, I find that it’s important to be extremely careful, because even alleged professionals and experts are often know-nothings. What we have, as is the case with almost any technical field, is a relatively small number of producers who might have some high-level understanding of their products and a relatively large number of consumers who use those products with little to no knowledge of how they work. Most of the people who are big AI boosters and evangelists are going to be in the latter camp. This isn’t new or unique. Almost every high-tech trend is like this.

When there was a heated debate over AI art going on in a thread on Agora Road, I decided to download a local copy of Stable Diffusion and try it out before sharing my thoughts. (I would encourage anyone who disagrees with what I’m about to say to check out SD for themselves first. If you have a PC with a decent graphics card, it’s very easy to set up, and there are plenty of tutorials available.) I ended up never making that post. Actually using the AI made me realize how wrong many of the assumptions made by people on both sides of the argument were. The idea that text-to-image AI can spontaneously and effortlessly generate any image you imagine, something that both pro- and anti-AI people seem to take for granted, is just flat-out wrong. Playing around with SD for an hour or so would be enough to convince anyone of this.

First of all, creating and refining text prompts that will work with the AI is inherently a trial-and-error process. SD prefers comma-separated lists of nouns and adjectives to grammatically correct sentences, and it’s hard to guess which of the “tags” you give it will be prioritized over the others. In theory, tags near the front of the prompt get priority over ones near the end, but I kept running into situations where adding new descriptions to the end of a prompt would make SD forget parts of the beginning, or vice versa. This isn’t much of an issue when trying to generate single objects, but it becomes a real hassle with more complicated scenes. Inpainting and outpainting allow you to focus on only one part of an image, but because SD doesn’t distinguish between tags relating to style and tags relating to content, it’s hard to do this without making your image look like a collage. Prompting always seems to involve the butterfly effect at some level, and there’s no guarantee that the combination of tags that generated a background in a particular style will do the same thing when applied to a single object in the foreground. One possible solution is to build a collage-style image from multiple inpainting prompts and then give the entire image one last stylistic pass to even things out, but trying this always gave me blurry images with an ugly “overcooked” feel to them. The fact that SD can only output 512x512 or 768x768 jpgs without crashing doesn’t help at all.

On top of all this, there are always going to be things that SD just doesn’t want to draw and styles of image that it prefers over others. From what I can tell, it will always default to copying either photography or 3DCG unless you explicitly tell it not to. Trying to get it to do painting or drawing is an exercise in futility, especially drawing. I think “cleaner” styles are harder because image generation starts from random noise, but that’s just a guess. It doesn’t recognize certain nouns or objects (it couldn’t draw a “laurel wreath” no matter how hard I tried), and describing things indirectly has a high probability of confusing it or making it forget other tags in the prompt. There are plenty of forks of SD out there that have been fine-tuned to specialize in certain styles, but then you run into the opposite problem: the AI wants to generate the same image every time. If you have a specific image or style in mind that vanilla SD doesn’t like and that nobody has trained a fork to specialize in, your only real option is to train one yourself.

The point of all this is that creating AI art requires doing a lot of work and making a lot of compromises that you wouldn’t have to worry about if you just sat down with a pencil and drew the image you had in your head. It’s impossible to just “think an image into existence” with Stable Diffusion, and because most of the problems I mentioned above stem from the general concept of text-to-image AI, I’m not convinced that improving the technology is going to change that. The trial-and-error nature of prompting, and the fact that nobody can predict exactly what the algorithm will come up with, mean that there are hard limits to what AI art is capable of on a conceptual level.

(The following section is a half-baked extrapolation of some ideas I picked up from a photography textbook. I’m not totally happy with how it came out, and the subject probably deserves its own article.)

The best analogy I can think of is the difference between photography and painting. A painter always starts with a blank canvas and adds things to it. He has to create everything in his image from nothing, through a process that requires a huge amount of effort and technical skill, but if he’s good enough he can create anything he imagines. A photographer starts with a scene and subtracts things from it until only the things he wants remain. He has a machine that can create a crystal-clear and accurate image of anything he wants without much effort on his part, but he will always have to go out into the world, find what he wants to photograph, and then figure out a way to isolate that object or scene from anything else that might be around it (cropping, editing, etc.). Nobody expects a photographer to be able to create the same kind of images that a painter does (even though, if they’re both working digitally, their output is identical from a technical point of view) because the creative processes behind the two mediums are totally different. The same should apply to AI art as well, but nobody seems to have grasped this yet. I would elaborate on how AI art is a “reductive” medium like photography, but I’m already way off topic.

After experimenting with SD for a week or so and thinking about all this, all the popular AI art talking points started to sound like they came from people with no real hands-on experience with the existing technology. The idea, mentioned above, that AI art was spontaneous and required no human effort was clearly ridiculous. The popular theory that AI would naturally replace human artists doing graphic design work and other forms of corporate art also became hard for me to believe. Trying to complete a specific, time-sensitive request for a paying client using the processes described above would be a complete nightmare, especially for something like a logo that required a “clean” style free from random noise. Imagine being an AI artist and telling your boss that, despite your pulling an all-nighter, your company’s software just wouldn’t generate the graphic he requested. Imagine having to tell him that his request to move one part of a graphic slightly to the right broke your entire design and forced you to start over from scratch. He would fire you on the spot and replace you with an art student from the third world willing to work for minimum wage. There’s also the fact that, despite all the uproar, no artist has actually lost their job to AI yet.

This was true when I wrote the original post, and from what I can tell it’s still true now. Aside from the most menial and repetitive jobs, all talk of job replacement by AI remains theoretical. That may change in the future, but I’m still skeptical.

On the other hand, the rhetoric about AI art not being a legitimate medium also rang hollow. Trying to create AI art myself quickly disproved the idea that the AI artists who were able to generate beautiful images didn’t have any skill or put any effort into their art. It took a lot of effort to make something I was satisfied with, and I never felt like I was actually interacting with an intelligent entity. I did a few experiments to test the accusation that AI was stealing the work of human artists, but I couldn’t produce anything that matched the style of the artist I gave it. I think this claim comes from the fact that almost all publicized SD prompts include a “by” tag, like “by Van Gogh,” but because SD tends to forget individual tags as a prompt gets longer, and can’t stick to one specific style, the effect isn’t as strong as you might think. Giving the AI something like “a painting by Van Gogh” will produce an abstract image that kind of looks like Van Gogh if you squint, and if you add more nouns and adjectives to the prompt, the “by Van Gogh” part eventually gets drowned out. There’s a direct influence from human-made art at some level in AI prompting, but unless you’re satisfied with very simplistic and abstract images, that influence quickly gets mixed in with so many other factors that the result is no longer a copy of an original. I don’t think there are any legal grounds for a copyright infringement case against AI, and even from a common-sense point of view I don’t think you can say it’s ripping anyone off.

The TL;DR here, and the reason I wrote all this out as a response to the post quoted above, is that spending a short time with one specific implementation of AI was enough to make me question everything people were saying about it. I don’t want to say that I no longer trust anything I read about AI, but I’ve definitely developed a healthy sense of skepticism. There’s way too much AI hype and anti-hype going around, and a lot of it seems to come from people who haven’t even tried using the tech in question, let alone developed any technical expertise.